A deepfake is a fake yet realistic audio or visual depiction of people performing actions they never performed in reality. It is a form of synthetic media crafted using artificial intelligence (AI). Deepfake software uses deep neural networks, such as Generative Adversarial Networks (GANs), to learn from input data (images, voice, and video) and generate new content that follows the same patterns. A GAN consists of two neural networks trained together: a generator and a discriminator. The generator creates fake samples, while the discriminator tries to tell those fakes apart from real data. The discriminator's feedback is passed back to the generator, which uses it to produce more realistic samples. As a result, the two networks train each other and eventually produce highly convincing results.
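The generator-discriminator loop described above can be illustrated with a toy example. The sketch below is not how real deepfake software is built. It is a minimal one-dimensional GAN written in plain Python, in which the "real data" are numbers drawn from a bell curve, the generator is a straight line, and the discriminator is a single logistic unit. All names and settings (`train_toy_gan`, the learning rate, the target mean of 4.0) are assumptions chosen for illustration.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function, returns a value in [0, 1]."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Train a tiny 1-D GAN and return ((a, b), (w, c)).

    Generator:      fake = a * z + b, with z drawn from N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), the estimated chance x is real
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters (hypothetical starting values)
    w, c = 0.1, 0.0   # discriminator parameters (hypothetical starting values)

    for _ in range(steps):
        x_real = rng.gauss(real_mean, real_std)   # one genuine sample
        z = rng.gauss(0.0, 1.0)                   # random noise input
        x_fake = a * z + b                        # one forged sample

        # Discriminator step: raise log D(real) + log(1 - D(fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: raise log D(fake), i.e. try to fool the
        # freshly updated discriminator (the feedback in the text).
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w               # d/dx of log D(x)
        a += lr * grad_x * z
        b += lr * grad_x

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print("generator offset b:", round(b, 3))
    print("discriminator score for x = 4.0:", round(sigmoid(w * 4.0 + c), 3))
```

Each pass through the loop mirrors the description: the discriminator is first nudged to score the genuine sample higher than the forged one, and the generator is then nudged in whatever direction raises the discriminator's score for its forgery. Real systems apply the same idea to images, audio, and video with far larger networks.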

What began as crude face-swapping experiments has rapidly evolved into highly realistic and difficult-to-detect impersonations. In the political domain, this technology poses a significant threat: used as a weapon, deepfakes can spread disinformation, incite unrest, and manipulate elections, leading to political chaos and even crisis. Indirectly, they also create a “liar’s dividend”, meaning that genuine evidence can be dismissed as fake, further eroding the distinction between truth and lies. As deepfake applications become more advanced and more accessible, their use is spreading beyond trolling and entertainment, and the risk of social and political crisis is rising correspondingly.

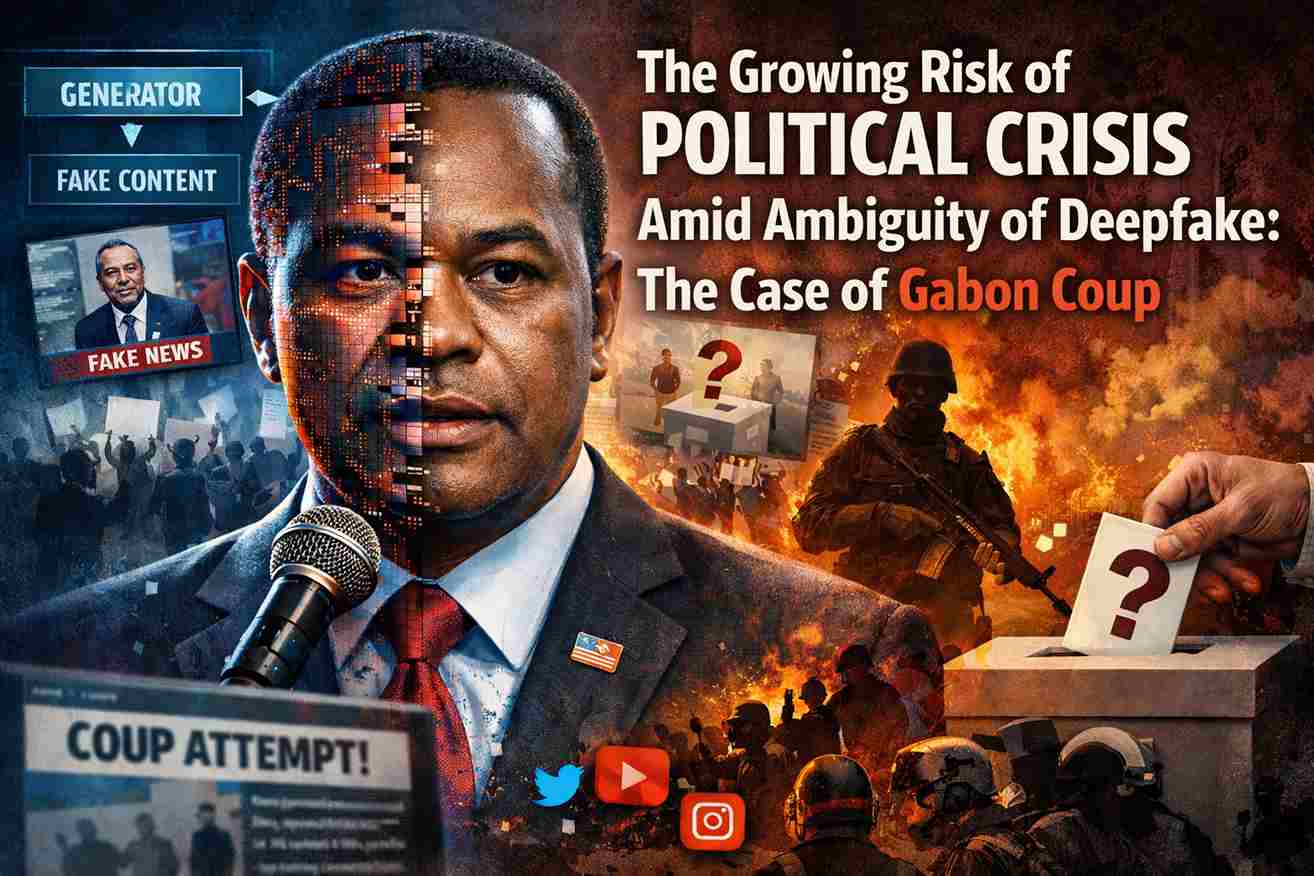

In the political landscape, Gabon’s attempted coup of 2019 is the most relevant example of how deepfakes can trigger a political crisis at the national level. Gabon, a small country on the west coast of Central Africa, rarely captures global attention. For decades, the country was ruled by a single family. Ali Bongo, who inherited power from his father, presided over a system that outwardly resembled a democracy but in practice functioned as an authoritarian regime. In the 2016 presidential election, Ali Bongo faced a serious challenge from opposition candidate Jean Ping, who was supported by much of the Gabonese public as well as the Gabonese diaspora abroad. Bongo was nevertheless declared the winner, which led to widespread protests. Despite allegations of blatant voter fraud, he remained in power.

This context is important to understanding the events that followed. In late 2018, Ali Bongo reportedly suffered a stroke and disappeared from public view for several weeks. Rumors began to spread that he had died. The controversy took a new twist when Gabonese government officials attempted to dispel the rumors by releasing a video of Ali Bongo delivering a speech. Instead of calming the situation, the video sparked further suspicion because Bongo’s speech and movements looked unnatural. This fueled claims that the video was a deepfake (although the footage was genuine), deepened suspicion about the president’s death, and contributed to the coup attempt of January 2019. Although the coup did not last more than a day, it highlighted how deepfake-driven disinformation, or even the mere suspicion of a deepfake, can trigger a political crisis at the national level. It took professional analysis to establish what was actually going on in the video. But is it even possible to analyze every deepfake video that circulates on social media each day? With the growing popularity of deepfake applications, it has become extremely difficult to differentiate what is real from what is not.

This quagmire needs proactive deliberation, and it has to be tackled not just at the individual level but at the collective level too. At the individual level, one has to be informed and cautious about everything shared on social media. Several fact-checking services verify the authenticity of popular news, such as iVerify Pakistan, BBC Verify, and Al Jazeera's Sanad agency, but these platforms and news agencies respond with a delay and have limited scope. Popular social media platforms such as ‘X’ employ Community Notes, a reactive, crowd-sourced fact-checking mechanism that helps to some extent to counter deepfakes by flagging misleading content and adding context to misleading posts for users. Recently, ‘X’ has started using AI bots as the next step in improving the platform's fact-checking process against misleading content, including deepfakes.

At both national and international levels, countering the threat of deepfakes requires a multi-dimensional framework that combines technological, legal, and societal initiatives. Technologically, AI-based detection tools can be developed and deployed to spot fabricated audio-visual content by analyzing inconsistencies in pixels, lighting, depth, and speech patterns. Microsoft’s Video Authenticator and Meta’s Deepfake Detection Challenge are examples of such efforts. Legally, several nations are taking steps to criminalize the misuse of deepfakes. For example, the DEEPFAKES Accountability Act has been proposed in the United States Congress, while the European Union’s AI Act imposes transparency obligations on deceptive synthetic media, requiring that AI-generated or manipulated content be clearly identified as such.

At the international level, organizations such as the UN and Europol are treating deepfakes as a transnational security threat, promoting information-sharing mechanisms and common standards for attribution and response, similar to existing frameworks against cybercrime. However, technology and laws alone are insufficient; public awareness and cooperation are equally crucial. Moving forward, effective countermeasures should combine mandatory labeling of AI-generated content, platform responsibility for takedowns, and crisis communication protocols to prevent deepfakes from triggering political instability or even conflict.

In sum, the emergence of deepfake technology has caused a dangerous shift in the contemporary information age. If audio and video can be convincingly fabricated, the very fabric of society and its political structures can lose credibility and even functionality. Authoritarian regimes may exploit this technology to fabricate legitimacy, while opposition movements may struggle to prove abuses or even grave violations of human rights and international law. There is a growing need for humanity to recognize the gravity of the threat that deepfakes and similar AI-driven technologies pose to human society, and to undertake comprehensive measures to mitigate it.

Ahmad Ibrahim

The author is a Research Associate at the Pakistan Navy War College, Lahore.

Shakh e Nabat

Ms. Shakh e Nabat is currently pursuing an M.Phil degree at Quaid-I-Azam University, Islamabad, and is working as an intern at the Maritime Center of Excellence (MCE), Pakistan Navy War College (PNWC). She can be contacted via email at diamohak@gmail.com.